Professor Andy Cockburn

Turbulent touch

Touch screens are everywhere. They’re lightweight, easy to modify, and can be intuitively appealing to use.

But the next time you’re coming in to land at Wellington airport with the raging breeze rolling and bouncing off the surrounding hills, consider whether you’d want your pilot to be controlling the aircraft with a touch screen.

SfTI Seed researcher Professor Andy Cockburn from the University of Canterbury is collaborating with Airbus to test a new way of giving people stability when they’re using touch screens in bouncy environments.

The applications for Andy’s approach are endless – aircraft, cars, motorbikes, tractors – any vehicle in which the knobs, buttons and dials of old are being upgraded by technologists to a touch screen-style display.

“It’s a lot cheaper to modify a touch screen user interface when you want to update a system than start reconfiguring and rewiring hardware, especially in a cockpit,” Andy says.

“In an unstable environment, it can be hard to accurately control small targets on a touch screen display – like the speed controls for the windscreen wipers on a Tesla.

“When you watch people using vehicle touch screens in vibrating environments, they’ll naturally do things like grip onto the outside of the screen or the surrounding mount with their thumb or fingers to anchor their hand, so it moves in unison with the display.

“But if the touch screen is large, you can’t do that for most controls on the display because your hand can’t stretch from the surrounding mount to all the points on the screen, so there needs to be another way to stabilise.”

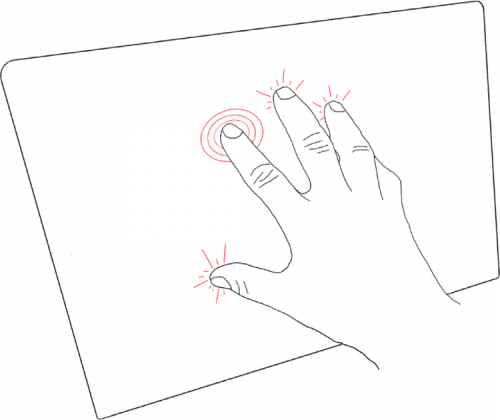

To solve that, Andy and his team have invented a technique called brace touch, in which the user can steady their hand anywhere on a touch screen with four or more points of contact (such as fingers and thumb), and then operate the target control with an additional selection gesture that the software can recognise. The software can discriminate between the fingers and thumb steadying the hand, and the additional gesture.

Andy says the team trialled three options for how the additional selection gesture could be recognised – double tap, dwell press and force press. They put the testers in both stable and bumpy environments to see how each of the three options performed.

With double tap, the user taps the screen twice with their free finger within a given time frame; with dwell press, they touch the target and leave their finger in place for an extended period; and with force press, they push more firmly on the target.

“The double tap just knocks the socks off the others,” Andy says.

“With the dwell press, people didn’t want to be waiting the extra time, and the force press created too much noise for the system to discriminate between the force of the stabilising hand, and the force of the intentional press. There was just too much opportunity for things to go wrong.”
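To make the idea concrete, here is a minimal Python sketch of how software might accept a double tap only while the hand is braced. It is an illustration, not the team's implementation: the class name, the method names and the 0.4-second window are all assumptions (the article says only "a given time frame"), and checking whether the tap actually lands on the target control is left out.

```python
from dataclasses import dataclass, field
from typing import Optional

BRACE_MIN_CONTACTS = 4     # from the article: four or more points of contact
DOUBLE_TAP_WINDOW_S = 0.4  # assumed value; the article gives no figure


@dataclass
class BraceDoubleTapRecognizer:
    """Hypothetical recogniser: a double tap only counts while the hand is braced."""

    held: set = field(default_factory=set)  # ids of contacts resting on the screen
    last_tap_time: Optional[float] = None   # time of the previous unpaired tap

    def touch_down(self, contact_id: int) -> None:
        # A steadying finger or thumb comes to rest on the screen.
        self.held.add(contact_id)

    def touch_up(self, contact_id: int) -> None:
        # A steadying finger or thumb lifts off.
        self.held.discard(contact_id)

    def is_braced(self) -> bool:
        return len(self.held) >= BRACE_MIN_CONTACTS

    def tap(self, t: float) -> bool:
        """Report a quick tap by the free finger at time t (seconds).

        Returns True when this tap completes a double tap while braced.
        """
        if not self.is_braced():
            self.last_tap_time = None  # taps made without bracing are ignored
            return False
        if (self.last_tap_time is not None
                and t - self.last_tap_time <= DOUBLE_TAP_WINDOW_S):
            self.last_tap_time = None  # pair consumed; start over
            return True
        self.last_tap_time = t         # first tap of a possible pair
        return False
```

In use, four steadying contacts are registered with `touch_down`, and two calls to `tap` less than 0.4 seconds apart produce a selection. A real system would also need to tell a tapping finger apart from a steadying finger that bounces off the screen in turbulence, which this sketch does not attempt.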

In a collaboration with Airbus, Andy and his team have also trialled brace touch on a touch screen version of the A350’s Auxiliary Power Unit FIRE controls, located on the console above the pilot’s head. The bright red physical button triggers the aircraft’s engine fire suppression system. It’s covered by a plastic guard, which the pilot lifts to get access to the FIRE button.

In Andy’s trial, the team generated an identical-looking touch screen version, in which the ‘guard’ was removed by an initial double tap of the touch screen FIRE button, and the suppression system was activated by a second double tap. Testers wore a body harness, were tethered to the ceiling and used a joystick to keep the ‘aircraft’ in straight and level flight, while a motion platform bounced around simulating turbulence.
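The two-step activation described here, where one double tap removes the guard and a second fires, can be pictured as a small state machine. The Python sketch below is an assumption-laden illustration rather than the trial software: the state names and the `GuardedFireButton` class are hypothetical, and it assumes the double taps have already been recognised elsewhere.

```python
from enum import Enum, auto


class GuardState(Enum):
    GUARDED = auto()  # virtual guard still covering the button
    ARMED = auto()    # guard removed by the first double tap
    FIRED = auto()    # suppression triggered by the second double tap


class GuardedFireButton:
    """Hypothetical model of the touch screen FIRE button with its virtual guard."""

    def __init__(self) -> None:
        self.state = GuardState.GUARDED

    def on_double_tap(self) -> GuardState:
        # Each recognised double tap advances one step; FIRED is final.
        if self.state is GuardState.GUARDED:
            self.state = GuardState.ARMED
        elif self.state is GuardState.ARMED:
            self.state = GuardState.FIRED
        return self.state
```

The point of the design is that a single stray double tap cannot trigger the system on its own, mirroring the physical guard the pilot has to lift first. The article does not say whether the virtual guard closes again after a timeout, so none is modelled.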

Andy says the error rate with the combination of brace touch and double tap was very low in all test environments. When testers were told to use their choice of either a single finger to operate a control on screen (unbraced), or the brace touch method, about three-quarters chose to brace in vibrating conditions.

“It’s been successful, and we’re pretty happy that brace touch is the right way to go.”

Andy says the next steps will be precise modelling of user behaviour to iron out any bugs in the system, and to work on using brace touch with other common touch screen movements, such as sliding and pinching. The team are also studying ways to help people use touch screens with less time spent looking at the touch screen and more spent focusing on the primary task (such as operating the vehicle).

“We want to find that sweet spot where people can accurately use their touch screen without having to pay a lot of attention to it.”

The researchers joining Andy on this project are Carl Gutwin and Catherine Trask at the University of Saskatchewan, Philippe Palanque at the Université Toulouse III Paul Sabatier, and Sarmad Soomro, Don Clucas, Simon Hoermann, Kien Tran Pham Thai and Dion Wolley at the University of Canterbury.