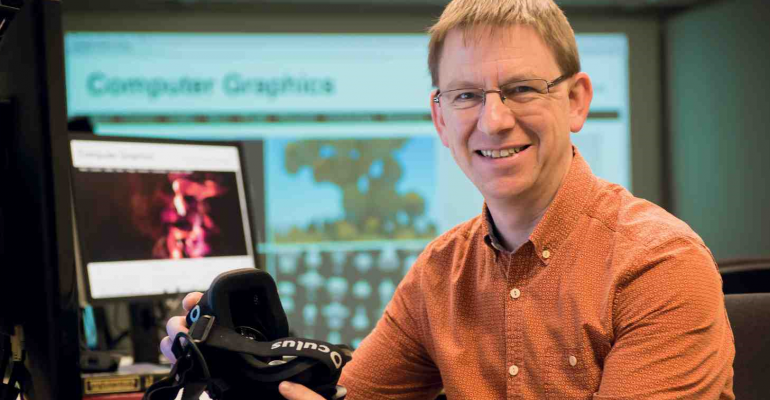

Professor Neil Dodgson

Augmenting reality to the horizon and back

Augmented reality, where a virtual reality headset overlays a computer-generated image onto what’s really there, works pretty well in a small room.

But take it outside and everything’s too bright and too far away – the cameras can’t sync to the real world; the images start jittering and the software has a meltdown.

All that glitching and jogging around is nauseating for the user as they move their head, so current headsets, like the Microsoft HoloLens and Magic Leap One, are pretty much confined to indoor use.

SfTI researcher Professor Neil Dodgson of Victoria University of Wellington says it’s only a matter of time, perhaps five years, before camera and headset technology is good enough for augmented reality to blossom into an everyday tool used in all kinds of educational, business, town planning and tourism situations. He and his team are hard at work on the computer science end of getting headsets to work outdoors.

“You could see what your city looked like before the buildings were there, and watch as the first Māori settlers arrive, or witness what happened to the landscape during an earthquake hundreds of years ago by putting on a headset and standing outside looking at the site you’re interested in,” Neil says.

“New Zealand has a real advantage here because most of our history is recent, so we still have photographic records of many things that have now disappeared. You could put a headset on and look out of a window in Te Papa across to Matiu / Somes Island in the middle of the harbour, and see it forested and with Māori pa in place. We could pioneer this work and then transfer it to the rest of the world.

“In Europe most of the social record that’s left is just the big stone buildings, the 1000-year-old castles and the 18th-century mansions, because all the wooden structures have rotted away. We’re left with an idea of how the rich and powerful lived, but not everybody else. With augmented reality, you could recreate a Saxon village where it originally stood, and because it’s virtual, you can easily include much more detail than with a physical recreation.”

The possibilities also extend to town planning.

“You could take a walk down on the waterfront and see a proposed new hotel rising up into the skyline, which would be hugely helpful for assessing the effects on surrounding buildings and businesses.”

To make all this possible, computers need to be able to almost perfectly lock the augmented reality view onto the real view – matching up these two and keeping them locked together while someone moves their head around is a huge computing challenge, known as registration.

Two parts of the problem are tech-related: to cope with all the light outside, headsets need to be much brighter than they currently are, and they also need to handle a much greater range of depth without making the wearer woozy.

Neil says the brightness problem is just a matter of time for the tech giants, and his team’s focus is on using video to enable full horizon registration for the first time.

“The idea of using the horizon line to register the real image with the virtual one isn’t new. There’s been good groundwork done on this by a top research team in Italy, but we’ve managed to improve their accuracy ten-fold by doing something quite different. We use 360-degree camera images and video, rather than just working with the section of the view that’s directly in front. Perhaps counter-intuitively, by giving the algorithm much more information to sort through, it produces much better accuracy.”

The team have been using publicly available digital elevation maps from satellite imagery to test the algorithm’s matching skills. They’ve found their algorithm does a much better job at matching the horizon and is not thrown by the substantial numbers of trees, buildings and other things that obstruct the horizon in most images. It also copes with the challenging situations where the separation between sky and ground isn’t very good, such as in dark, cloudy or misty conditions, when the sea and sky are similar colours, or when there’s strong glare from the sun.
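For readers curious about what horizon matching involves in practice, here is a minimal sketch in Python. It is not the team’s algorithm, which the article does not describe in detail. It assumes, purely for illustration, that both the camera view and the elevation map have already been reduced to a list of horizon heights (in degrees) sampled at equal steps around the full 360-degree panorama. The function name `best_heading_offset`, the optional `visible` mask for obstructed samples, and the brute-force search over every rotation are all hypothetical choices made for this example.

```python
import math


def best_heading_offset(observed, reference, visible=None):
    """Find the rotation that best aligns an observed horizon with a reference.

    observed, reference: horizon elevation angles sampled at equal azimuth
        steps around a full 360-degree panorama (same length).
    visible: optional list of booleans marking which observed samples are
        usable (for example, not hidden by trees or buildings).

    Returns (shift, error), where `shift` is the number of samples the
    reference must be rotated by, and `error` is the mean squared
    difference over the visible samples at that rotation.
    """
    n = len(reference)
    if len(observed) != n:
        raise ValueError("profiles must have the same number of samples")
    if visible is None:
        visible = [True] * n
    idx = [i for i in range(n) if visible[i]]
    if not idx:
        raise ValueError("no visible horizon samples")

    best_shift, best_err = 0, math.inf
    # Brute force: try every possible rotation and keep the closest match.
    for shift in range(n):
        err = sum(
            (observed[i] - reference[(i + shift) % n]) ** 2 for i in idx
        ) / len(idx)
        if err < best_err:
            best_shift, best_err = shift, err
    return best_shift, best_err


if __name__ == "__main__":
    # Synthetic skyline with 72 samples (one every 5 degrees); made-up data.
    n = 72
    reference = [
        5 * math.sin(2 * math.pi * i / n) + 2 * math.sin(6 * math.pi * i / n + 1)
        for i in range(n)
    ]
    # Pretend the camera is rotated by 10 samples relative to the map.
    observed = [reference[(i + 10) % n] for i in range(n)]
    shift, err = best_heading_offset(observed, reference)
    print("heading offset in degrees:", shift * 360 / n, "error:", err)
```

A real system would also have to extract the horizon from the image in the first place and estimate position and tilt, not just heading; the sketch only shows why a longer stretch of horizon gives the matcher more to lock onto.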

It’s much faster than humans at matching terrain and produces results as good as an expert human’s. The team are now assessing how little horizon is needed to ensure a good lock.

“We’ve been surprised at how accurate it is, even with as little as an eighth of the horizon visible.”

Neil and the team will be publishing their results later this year, and the next focus will be to accurately identify which objects are in the foreground of the views and which are in the background. It's the next step toward the rich, detailed augmented reality of our imaginations.